# pyscience imports

import numpy as np

import pandas as pd

pd.set_option('float_format', '{:f}'.format)

# viz

import matplotlib.pyplot as plt

import plotnine as pn

font = {'family' : 'IBM Plex Sans',

'weight' : 'normal',

'size' : 10}

plt.rc('font', **font)Data: 1_000_000 Bandcamp Sales

_id: unique identifier combining the sale’s URL and UTC timestamp.url: the path to the item on Bandcamp. Use this column to join this dataset to the dataset of Bandcamp items.artist_name: Name of the artist.album_title: Title of the album, if applicable.art_url: path to the item’s art image.item_type: denotes the type of object. a for digital albums, p for physical items, and t for digital tracks.slug_type: also denotes the type of object. a for all albums, p for merch, and t for tracks.utc_date: the UTC timestamp of the sale datetime.country_code: country code of the buyer.country: full country code name of the buyer.item_price: price of the item in the seller’s currency.currency: the seller’s currency.amount_paid: amount paid in the seller’s currency.amount_paid_fmt: amount paid in the seller’s currency, with the currency symbol.amount_paid_usd: amount paid converted to US Dollars.amount_over_fmt: amount voluntarily paid over the item price in the seller’s currency.

dataroot = "/home/alal/tmp/scratch_data/000_musicData/"

df = pd.read_csv(dataroot+"1000000-bandcamp-sales.csv").infer_objects()

df['utc_date2'] = pd.to_datetime(df['utc_date'], unit='s')

df['date'], df['hour'] = df.utc_date2.dt.date, df.utc_date2.dt.strftime('%H')

df['country2'] = np.where(df.country.isin(df.country.value_counts()[:10].index), df.country, 'Other')

df.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1000000 entries, 0 to 999999

Data columns (total 28 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Unnamed: 0 1000000 non-null int64

1 _id 1000000 non-null object

2 art_url 1000000 non-null object

3 item_type 1000000 non-null object

4 utc_date 1000000 non-null float64

5 country_code 1000000 non-null object

6 track_album_slug_text 2237 non-null object

7 country 1000000 non-null object

8 slug_type 988416 non-null object

9 amount_paid_fmt 1000000 non-null object

10 item_price 1000000 non-null float64

11 item_description 999977 non-null object

12 art_id 764520 non-null float64

13 url 1000000 non-null object

14 amount_paid 1000000 non-null float64

15 releases 11584 non-null float64

16 artist_name 999990 non-null object

17 currency 1000000 non-null object

18 album_title 356461 non-null object

19 amount_paid_usd 1000000 non-null float64

20 package_image_id 232708 non-null float64

21 amount_over_fmt 119133 non-null object

22 item_slug 21742 non-null object

23 addl_count 21742 non-null float64

24 utc_date2 1000000 non-null datetime64[ns]

25 date 1000000 non-null object

26 hour 1000000 non-null object

27 country2 1000000 non-null object

dtypes: datetime64[ns](1), float64(8), int64(1), object(18)

memory usage: 213.6+ MBEDA

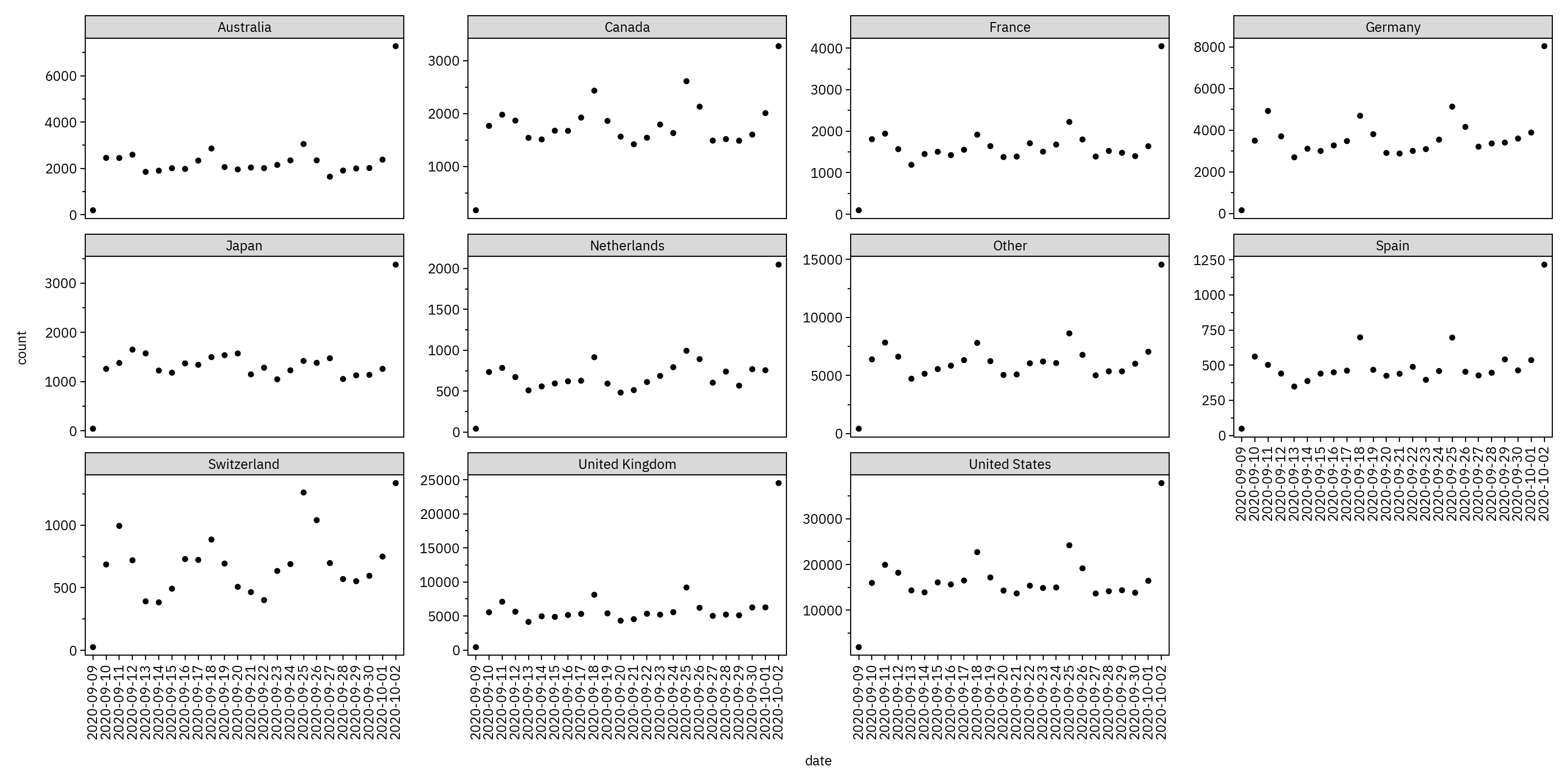

grouped_day_level = df.groupby(['country2', 'date']).size().reset_index(name='count')

(

pn.ggplot(grouped_day_level, pn.aes(x="date", y="count"))

+ pn.geom_point()

+ pn.facet_wrap("country2", scales="free_y")

+ pn.theme_matplotlib()

+ pn.theme(figure_size=(16, 8), axis_text_x=pn.element_text(angle=90))

)

Strong weekend effects.

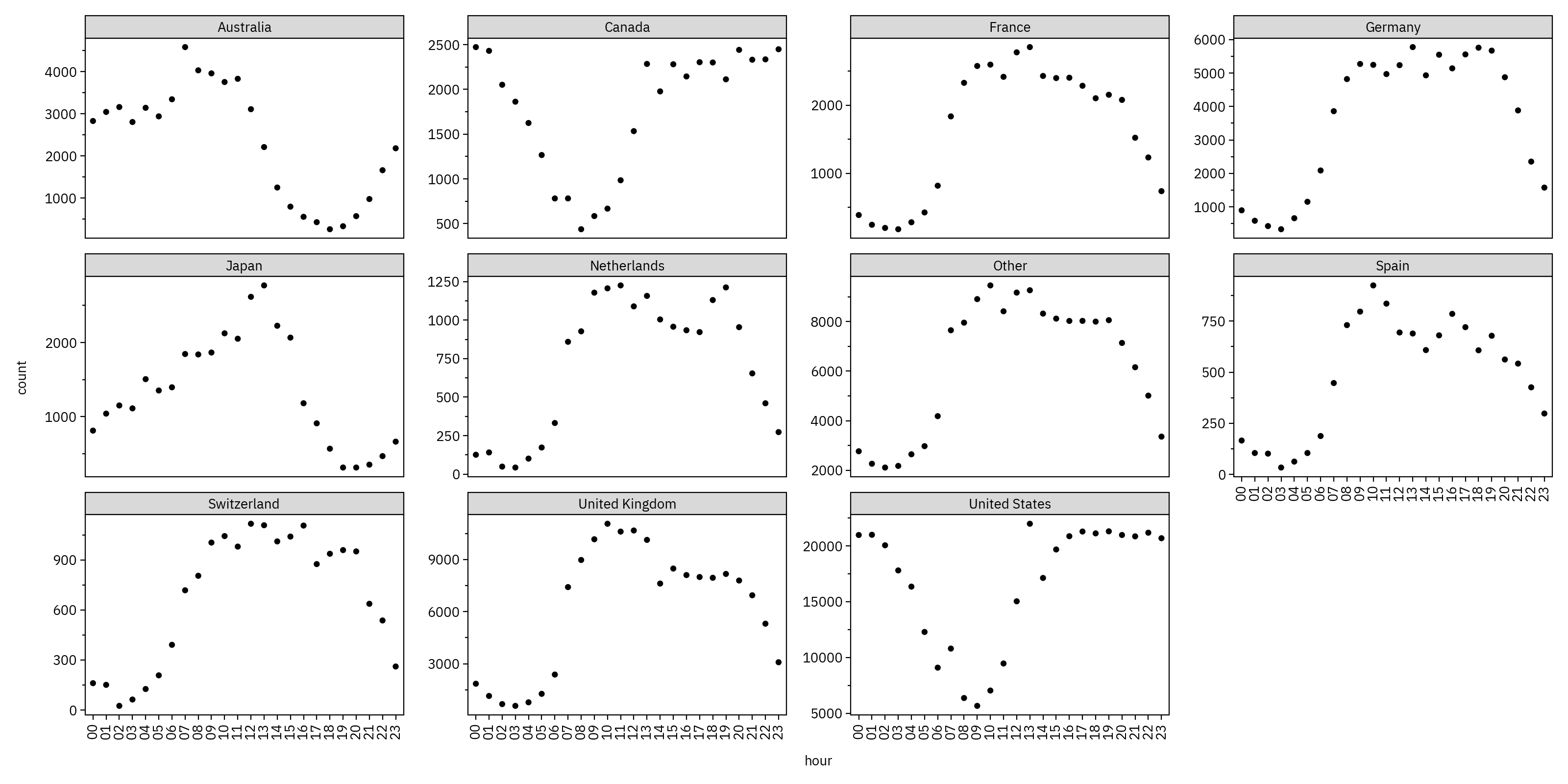

grouped_hour_level = df.groupby(['country2', 'hour']).size().reset_index(name='count')

(

pn.ggplot(grouped_hour_level, pn.aes(x='hour', y="count"))

+ pn.geom_point()

+ pn.facet_wrap("country2", scales="free_y")

+ pn.theme_matplotlib()

+ pn.theme(figure_size=(16, 8),

axis_text_x=pn.element_text(angle=90)

)

)

Peaks during the workday (~9 AM).

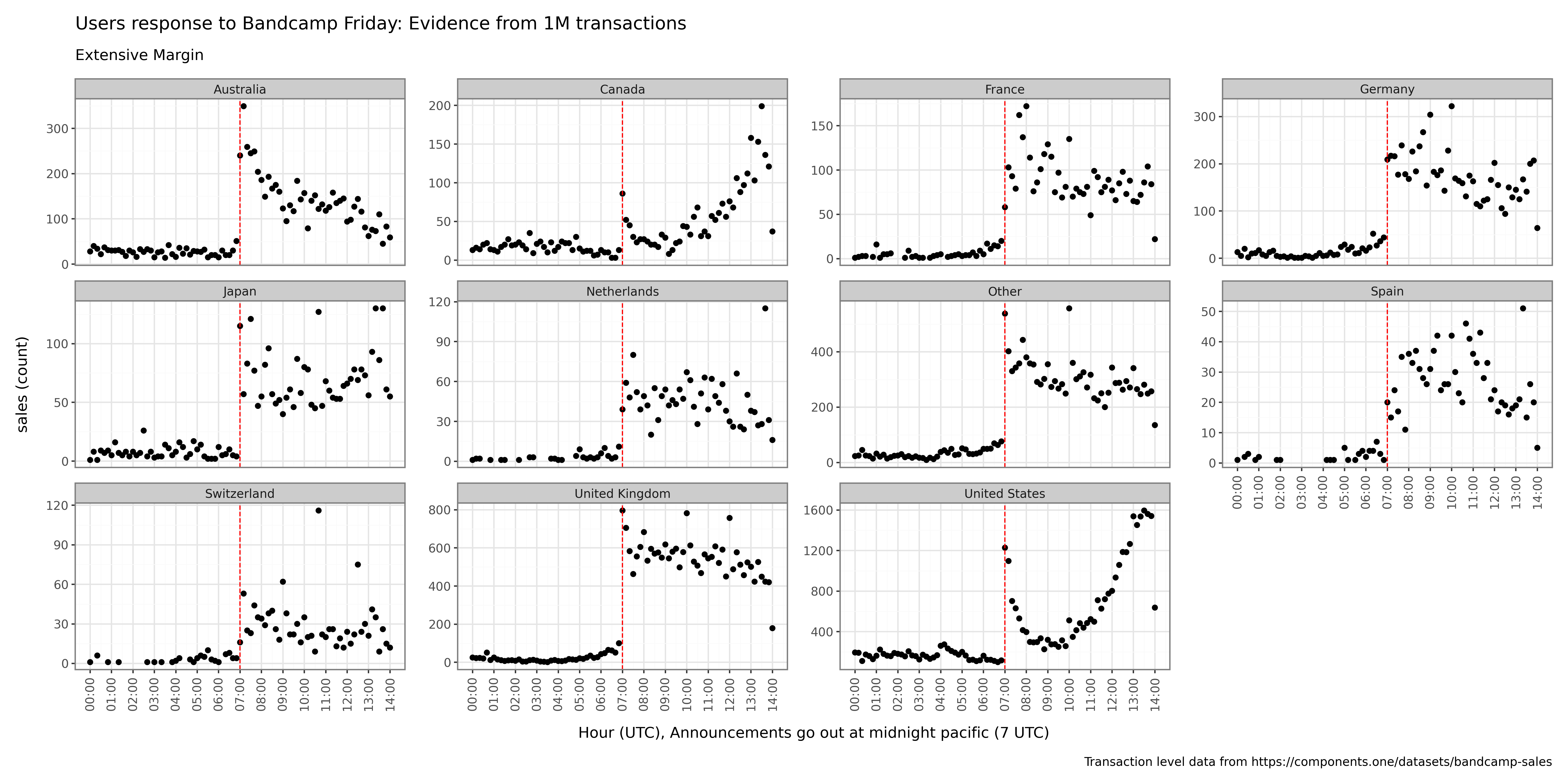

bandcamp fridays (‘RD in Time’)

We bin the data into 10 minute windows for a high-resolution look around the midnight pacific announcement.

bcf = (df

.query("utc_date2.dt.date == @pd.to_datetime('2020-10-02').date()")

.assign(time_k_min=lambda x: x['utc_date2'].dt.floor('10min'))

.groupby(['country2', 'time_k_min']).size().reset_index(name='count')

)

bcf['time_label'] = bcf['time_k_min'].dt.strftime('%H:%M')

bcf['hours'] = bcf['time_k_min'].dt.hour + bcf['time_k_min'].dt.minute / 60

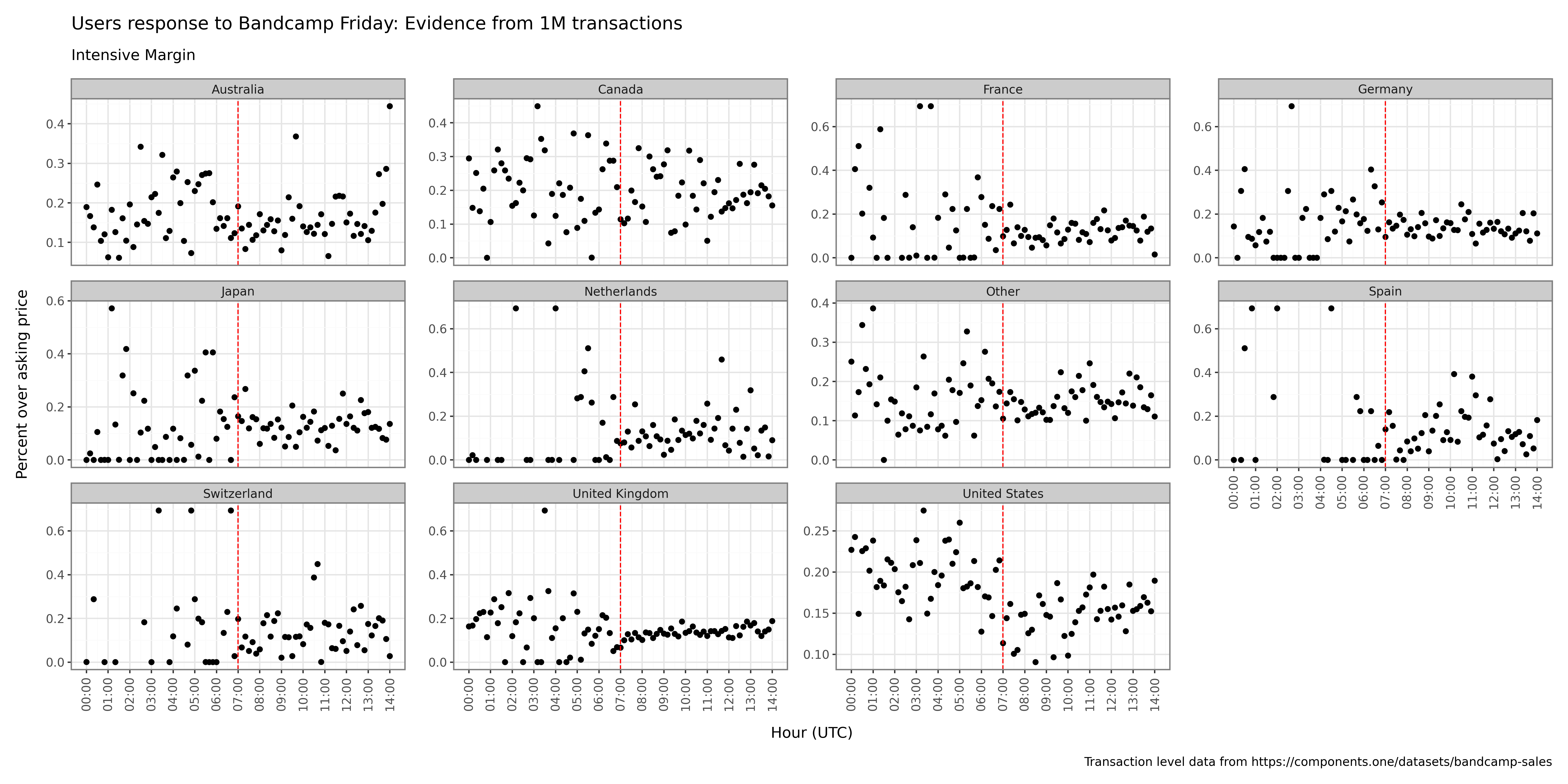

# intensive margin

df['pct_over'] = np.where(df.item_price > 0, (df.amount_paid - df.item_price) / df.item_price, 1)

df['pct_over'] = np.clip(df['pct_over'], 0, 1) # cap at 100%

gener = (

df

.query("utc_date2.dt.date == @pd.to_datetime('2020-10-02').date()")

.assign(time_k_min=lambda x: x['utc_date2'].dt.floor('10min'))

.groupby(['country2', 'time_k_min']).pct_over.mean().reset_index(name='generosity')

)

bcf = bcf.merge(gener, on=['country2', 'time_k_min'])

bcf['log1p_generosity'] = np.log1p(bcf['generosity'])# Create plots

f1 = (

pn.ggplot(bcf, pn.aes(x="hours", y="count"))

+ pn.geom_point()

+ pn.geom_vline(xintercept=7-.01, linetype="dashed", color="red")

+ pn.facet_wrap("country2", scales="free_y")

+ pn.theme_bw()

+ pn.theme(

figure_size=(16, 8),

axis_text_x=pn.element_text(angle=90),

dpi=200,

)

+ pn.labs(

title="Users response to Bandcamp Friday: Evidence from 1M transactions",

subtitle="Extensive Margin",

y="sales (count)",

x="Hour (UTC), Announcements go out at midnight pacific (7 UTC)",

caption="Transaction level data from https://components.one/datasets/bandcamp-sales",

)

+ pn.scale_x_continuous(

breaks=range(0, 25), labels=lambda l: [f"{int(x):02d}:00" for x in l]

))

f1

Very strong effect across all countries, including (perhaps surprisingly), the US and Canada, where the the bump in transactions is likely driven by midnight shoppers on the west coast.

# Create the plot

f2 = (

pn.ggplot(bcf, pn.aes(x="hours", y="log1p_generosity"))

+ pn.geom_point()

+ pn.geom_vline(xintercept=7-.01, linetype="dashed", color="red")

+ pn.facet_wrap("country2", scales="free_y")

+ pn.theme_bw()

+ pn.theme(

figure_size=(16, 8),

axis_text_x=pn.element_text(angle=90),

dpi=200,

)

+ pn.labs(

title="Users response to Bandcamp Friday: Evidence from 1M transactions",

subtitle="Intensive Margin",

y="Percent over asking price",

x="Hour (UTC)",

caption="Transaction level data from https://components.one/datasets/bandcamp-sales",

)

+ pn.scale_x_continuous(

breaks=range(0, 25), labels=lambda l: [f"{int(x):02d}:00" for x in l]

)

)

f2

Since buyers can set their own price, we can compute their generosity in terms of the percentage over the asking price. Generosity doesn’t change much around the annoucement. So we conclude that the bandcamp friday effect is driven by the extensive margin (transactions) rather than the intensive margin (generosity).