papers

-

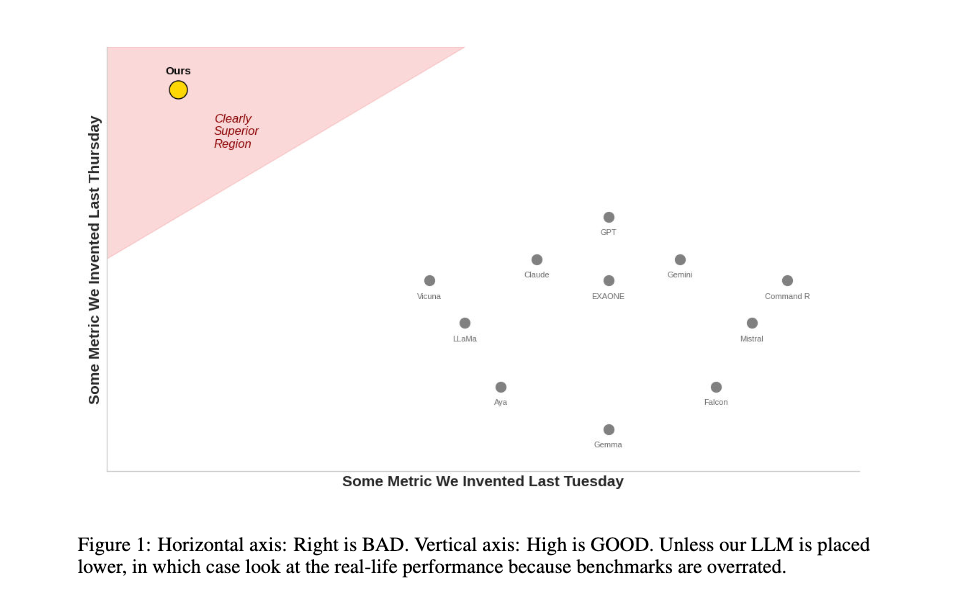

SIGBOVIK 2025 proceedings. For those living under a rock, SIGBOVIK is the only good CS conference and retains what husk of a soul remains in computing and adjacent fields. Too many classics to list, but a sample:

- LLMs are all you need

- Uppercase is all you need

- Maximum divergence is all you need

- “The compilation strategy of ccdoom is straightforward and efficient: it ignores the user’s program, echoes the word “doom”, and outputs a copy of DOOM, id Software’s acclaimed 1993 video game”

- “Damn, Turing kinda got hands”

- Quintuple-Blind Peer Review

- “Maximum Novelty in Robotics Research via Strategic Copy-Paste: An Information-Theoretic Recipe for Paper Generation”

-

Qu et al: surprising equivalence result between MLE for logit and Sinkhorn’s matrix balancing problem, which then connects to optimal transport.
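For anyone who hasn't met the matrix balancing side of this: the problem is to rescale the rows and columns of a positive matrix until it hits target row and column sums, and Sinkhorn's algorithm just alternates the two rescalings. A minimal pure-Python sketch, assuming a strictly positive matrix and targets with equal totals; the function name `sinkhorn_balance` and the toy numbers are made up for illustration, and this shows only the balancing iteration, not the paper's equivalence with logit MLE.

```python
def sinkhorn_balance(A, row_targets, col_targets, n_iter=500, tol=1e-12):
    """Find scalings r, c so that diag(r) @ A @ diag(c) has the target margins.

    Assumes A is strictly positive and sum(row_targets) == sum(col_targets).
    """
    n, m = len(A), len(A[0])
    r = [1.0] * n
    c = [1.0] * m
    for _ in range(n_iter):
        # Rescale rows so that row sums match their targets.
        r = [row_targets[i] / sum(A[i][j] * c[j] for j in range(m))
             for i in range(n)]
        # Rescale columns so that column sums match their targets.
        c = [col_targets[j] / sum(r[i] * A[i][j] for i in range(n))
             for j in range(m)]
        # Column sums are now exact; stop once the row sums agree too.
        err = max(
            abs(r[i] * sum(A[i][j] * c[j] for j in range(m)) - row_targets[i])
            for i in range(n)
        )
        if err < tol:
            break
    return [[r[i] * A[i][j] * c[j] for j in range(m)] for i in range(n)]


# Toy example: balance a 2x2 positive matrix to unit row and column sums.
balanced = sinkhorn_balance([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0], [1.0, 1.0])
row_sums = [sum(row) for row in balanced]
col_sums = [sum(col) for col in zip(*balanced)]
print(row_sums, col_sums)
```

In the optimal transport reading, the same alternating rescaling is what solves the entropy-regularized transport problem, with the target margins playing the role of the two distributions.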

-

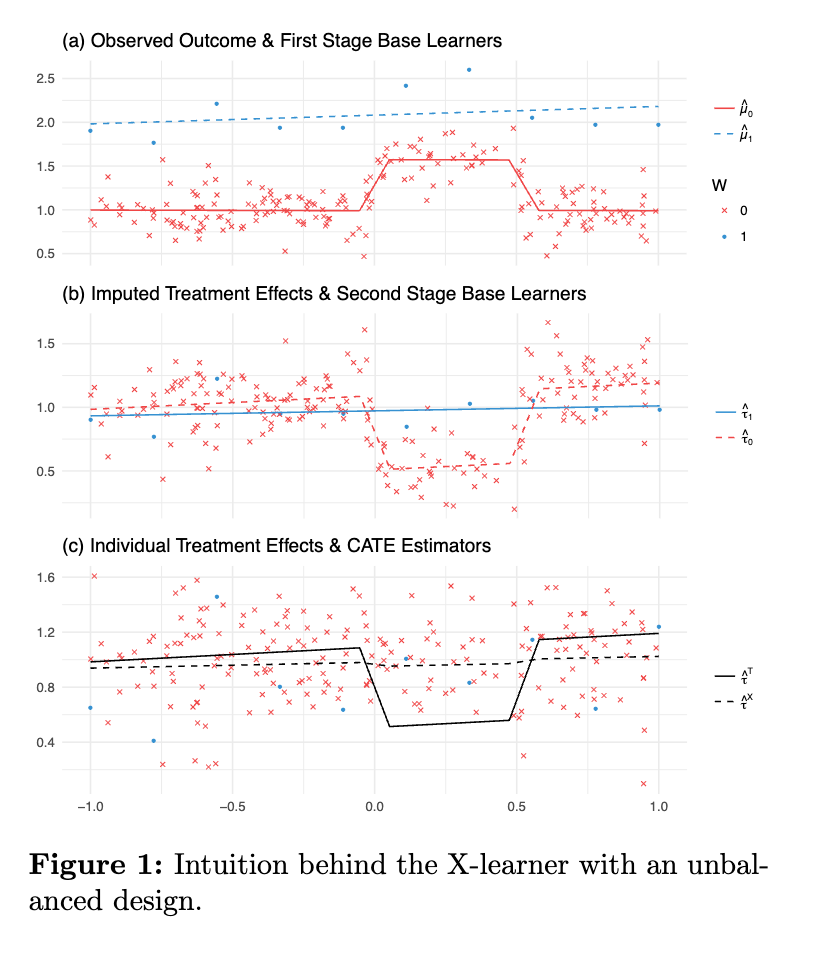

Liu et al: nice monograph on estimands and estimators under treatment-effect heterogeneity. Represents a productive output of a protracted back-and-forth between Data Colada and Hainmueller/Mummolo/Xu that was born out of the Colada folks’ frankly baffling misunderstanding of the underlying estimand and modern causal inference more broadly, over-reliance on linear models, and general belligerence. Assuming incompetence or malfeasance of your interlocutor might be a reasonable heuristic in social psych, but not in most other fields [CoI: I am not a neutral party to this exchange].

-

Ahrens et al: nice self-contained review of the essential ingredients of DML (Neyman orthogonality, cross-fitting).

-

Kim et al: Counterfactual MVO. Nice causal-inference angle on a classically finance-focussed optimization problem that rules the world.

-

Coulombe on OLS as an attention mechanism. A paper that walks through the essential ingredients of attention (→ transformers → LLMs) using trusty old OLS. Sometimes stretches credulity / verges on self-parody [of economists shoehorning absolutely everything into least squares to understand it], but fun idea nevertheless.

- Related: Bruns-Smith et al is a nice paper showing that (a particular flavor of) double-debiased machine learning can also be viewed entirely through the lens of regularized regression.
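One way to make the OLS-as-attention idea concrete: an OLS prediction for a test point is a weighted sum of the training outcomes, with weights x_test' (X'X)^{-1} x_i that behave like query-key similarity scores. A minimal pure-Python sketch under that reading, for a design with an intercept and a single regressor; it is not necessarily the paper's exact construction, and the function names and toy data are invented for illustration.

```python
def ols_attention_weights(X_train, X_test):
    """Return W with W[j][i] = x_test_j' (X'X)^{-1} x_train_i.

    Assumes each row of X has exactly two columns (intercept, regressor),
    so that (X'X)^{-1} has a closed form.
    """
    # Entries of the 2x2 Gram matrix X'X.
    a = sum(row[0] * row[0] for row in X_train)
    b = sum(row[0] * row[1] for row in X_train)
    d = sum(row[1] * row[1] for row in X_train)
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]

    def score(query, key):
        # Bilinear similarity between a test row and a training row.
        return sum(query[m] * inv[m][n] * key[n]
                   for m in range(2) for n in range(2))

    return [[score(q, k) for k in X_train] for q in X_test]


def predict(weights, y_train):
    # Each prediction is a weighted sum of training outcomes ("values").
    return [sum(w * y for w, y in zip(row, y_train)) for row in weights]


# Toy data generated exactly by y = 1 + 2x, then queried at x = 4.
X_train = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y_train = [1.0, 3.0, 5.0, 7.0]
X_test = [[1.0, 4.0]]

weights = ols_attention_weights(X_train, X_test)
y_hat = predict(weights, y_train)
print(weights, y_hat)
```

With an intercept in the design, each row of weights sums to one, much like softmax attention weights, except that OLS weights are allowed to go negative.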

-

Fernandez-Loria: something of an inane hot take from a trad ML person about causal inference. Trivially true in one sense, utterly useless in another. Exhibit A - what’s the trad supervised learning trick for this?

links

-

Instant SQL from the duckdb crew

-

Advanced Python techniques: primarily OOP [generics, typing], operator overloading, context managers, and a frankly diabolical combination of positional-only and keyword-only argument markers that makes `def foo(a, b, /, c, d, *, e, f)` a valid function signature.
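To unpack that signature: everything before `/` is positional-only, everything after `*` is keyword-only, and whatever sits in between can be passed either way. A small sketch (Python 3.8+); the helper `raises_type_error` is made up for the demo and is not from the linked article.

```python
def foo(a, b, /, c, d, *, e, f):
    # a, b: positional-only; c, d: positional or keyword; e, f: keyword-only.
    return (a, b, c, d, e, f)


def raises_type_error(thunk):
    """Return True if calling thunk() raises TypeError, else False."""
    try:
        thunk()
    except TypeError:
        return True
    return False


# Valid: c and d can be passed positionally or by keyword.
all_positional_middle = foo(1, 2, 3, 4, e=5, f=6)
keyword_middle = foo(1, 2, c=3, d=4, e=5, f=6)

# Invalid: a and b cannot be passed by keyword.
keyword_for_positional_only = raises_type_error(
    lambda: foo(a=1, b=2, c=3, d=4, e=5, f=6)
)

# Invalid: e and f cannot be passed positionally.
positional_for_keyword_only = raises_type_error(
    lambda: foo(1, 2, 3, 4, 5, 6)
)

print(all_positional_middle, keyword_middle)
print(keyword_for_positional_only, positional_for_keyword_only)
```

The practical upside of `/` is that positional-only parameter names can be renamed later without breaking callers, while `*` forces call sites to spell out the options they set.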