Indexes¶

Today we're going to be talking about pandas' Indexes.

They're essential to pandas, but can be a difficult concept to grasp at first.

I suspect this is partly because they're unlike what you'll find in SQL or R.

Indexes offer

- a metadata container

- easy label-based row selection and assignment

- easy label-based alignment in operations

One of my first tasks when analyzing a new dataset is to identify a unique identifier for each observation, and set that as the index. It could be a simple integer, or like in our first chapter, it could be several columns (carrier, origin dest, tail_num date).

To demonstrate the benefits of proper Index use, we'll first fetch some weather data from sensors at a bunch of airports across the US.

See here for the example scraper I based this off of.

Those uninterested in the details of fetching and prepping the data and skip past it.

At a high level, here's how we'll fetch the data: the sensors are broken up by "network" (states).

We'll make one API call per state to get the list of airport IDs per network (using get_ids below).

Once we have the IDs, we'll again make one call per state getting the actual observations (in get_weather).

Feel free to skim the code below, I'll highlight the interesting bits.

%matplotlib inline

import os

import json

import glob

import datetime

from io import StringIO

import requests

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import prep

sns.set_style('ticks')

pd.options.display.max_rows = 10

# States are broken into networks. The networks have a list of ids, each representing a station.

# We will take that list of ids and pass them as query parameters to the URL we built up ealier.

states = """AK AL AR AZ CA CO CT DE FL GA HI IA ID IL IN KS KY LA MA MD ME

MI MN MO MS MT NC ND NE NH NJ NM NV NY OH OK OR PA RI SC SD TN TX UT VA VT

WA WI WV WY""".split()

# IEM has Iowa AWOS sites in its own labeled network

networks = ['AWOS'] + ['{}_ASOS'.format(state) for state in states]

def get_weather(stations, start=pd.Timestamp('2017-01-01'),

end=pd.Timestamp('2017-01-31')):

'''

Fetch weather data from MESONet between ``start`` and ``stop``.

'''

url = ("http://mesonet.agron.iastate.edu/cgi-bin/request/asos.py?"

"&data=tmpf&data=relh&data=sped&data=mslp&data=p01i&data=v"

"sby&data=gust_mph&data=skyc1&data=skyc2&data=skyc3"

"&tz=Etc/UTC&format=comma&latlon=no"

"&{start:year1=%Y&month1=%m&day1=%d}"

"&{end:year2=%Y&month2=%m&day2=%d}&{stations}")

stations = "&".join("station=%s" % s for s in stations)

weather = (pd.read_csv(url.format(start=start, end=end, stations=stations),

comment="#")

.rename(columns={"valid": "date"})

.rename(columns=str.strip)

.assign(date=lambda df: pd.to_datetime(df['date']))

.set_index(["station", "date"])

.sort_index())

float_cols = ['tmpf', 'relh', 'sped', 'mslp', 'p01i', 'vsby', "gust_mph"]

weather[float_cols] = weather[float_cols].apply(pd.to_numeric, errors="corce")

return weather

def get_ids(network):

url = "http://mesonet.agron.iastate.edu/geojson/network.php?network={}"

r = requests.get(url.format(network))

md = pd.io.json.json_normalize(r.json()['features'])

md['network'] = network

return md

There isn't too much in get_weather worth mentioning, just grabbing some CSV files from various URLs.

They put metadata in the "CSV"s at the top of the file as lines prefixed by a #.

Pandas will ignore these with the comment='#' parameter.

I do want to talk briefly about the gem of a method that is json_normalize .

The weather API returns some slightly-nested data.

url = "http://mesonet.agron.iastate.edu/geojson/network.php?network={}"

r = requests.get(url.format("AWOS"))

js = r.json()

js['features'][:2]

If we just pass that list off to the DataFrame constructor, we get this.

pd.DataFrame(js['features']).head()

In general, DataFrames don't handle nested data that well.

It's often better to normalize it somehow.

In this case, we can "lift"

the nested items (geometry.coordinates, properties.sid, and properties.sname)

up to the top level.

pd.io.json.json_normalize(js['features'])

Sure, it's not that difficult to write a quick for loop or list comprehension to extract those,

but that gets tedious.

If we were using the latitude and longitude data, we would want to split

the geometry.coordinates column into two. But we aren't so we won't.

Going back to the task, we get the airport IDs for every network (state)

with get_ids. Then we pass those IDs into get_weather to fetch the

actual weather data.

import os

ids = pd.concat([get_ids(network) for network in networks], ignore_index=True)

gr = ids.groupby('network')

store = 'data/weather.h5'

if not os.path.exists(store):

os.makedirs("data/weather", exist_ok=True)

for k, v in gr:

weather = get_weather(v['id'])

weather.to_csv("data/weather/{}.csv".format(k))

weather = pd.concat([

pd.read_csv(f, parse_dates=['date'], index_col=['station', 'date'])

for f in glob.glob('data/weather/*.csv')

]).sort_index()

weather.to_hdf("data/weather.h5", "weather")

else:

weather = pd.read_hdf("data/weather.h5", "weather")

weather.head()

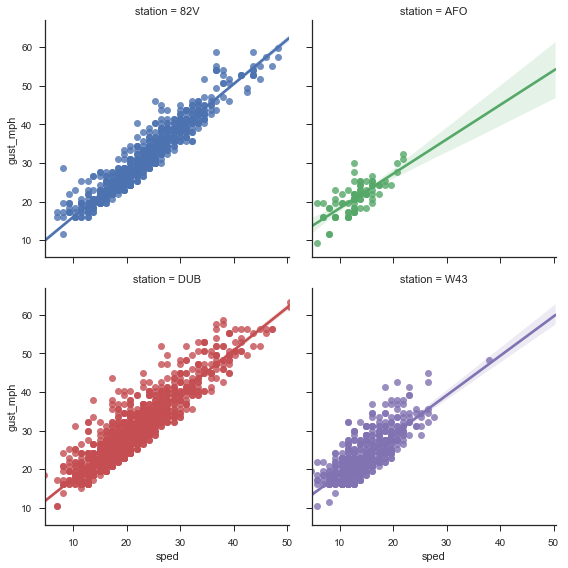

OK, that was a bit of work. Here's a plot to reward ourselves.

airports = ['W43', 'AFO', '82V', 'DUB']

g = sns.FacetGrid(weather.loc[airports].reset_index(),

col='station', hue='station', col_wrap=2, size=4)

g.map(sns.regplot, 'sped', 'gust_mph')

Set Operations¶

Indexes are set-like (technically multisets, since you can have duplicates), so they support most python set operations. Since indexes are immutable you won't find any of the inplace set operations.

One other difference is that since Indexes are also array-like, you can't use some infix operators like - for difference. If you have a numeric index it is unclear whether you intend to perform math operations or set operations.

You can use & for intersection, | for union, and ^ for symmetric difference though, since there's no ambiguity.

For example, lets find the set of airports that we have both weather and flight information on. Since weather had a MultiIndex of airport, datetime, we'll use the levels attribute to get at the airport data, separate from the date data.

# Bring in the flights data

flights = pd.read_hdf('data/flights.h5', 'flights')

weather_locs = weather.index.levels[0]

# The `categories` attribute of a Categorical is an Index

origin_locs = flights.origin.cat.categories

dest_locs = flights.dest.cat.categories

airports = weather_locs & origin_locs & dest_locs

airports

print("Weather, no flights:\n\t", weather_locs.difference(origin_locs | dest_locs), end='\n\n')

print("Flights, no weather:\n\t", (origin_locs | dest_locs).difference(weather_locs), end='\n\n')

print("Dropped Stations:\n\t", (origin_locs | dest_locs) ^ weather_locs)

Flavors¶

Pandas has many subclasses of the regular Index, each tailored to a specific kind of data.

Most of the time these will be created for you automatically, so you don't have to worry about which one to choose.

IndexInt64IndexRangeIndex: Memory-saving special case ofInt64IndexFloatIndexDatetimeIndex: Datetime64[ns] precision dataPeriodIndex: Regularly-spaced, arbitrary precision datetime data.TimedeltaIndexCategoricalIndexMultiIndex

You will sometimes create a DatetimeIndex with pd.date_range (pd.period_range for PeriodIndex).

And you'll sometimes make a MultiIndex directly too (I'll have an example of this in my post on performace).

Some of these specialized index types are purely optimizations; others use information about the data to provide additional methods. And while you might occasionally work with indexes directly (like the set operations above), most of they time you'll be operating on a Series or DataFrame, which in turn makes use of its Index.

Row Slicing¶

We saw in part one that they're great for making row subsetting as easy as column subsetting.

weather.loc['DSM'].head()

Without indexes we'd probably resort to boolean masks.

weather2 = weather.reset_index()

weather2[weather2['station'] == 'DSM'].head()

Slightly less convenient, but still doable.

Indexes for Easier Arithmetic, Analysis¶

It's nice to have your metadata (labels on each observation) next to you actual values. But if you store them in an array, they'll get in the way of your operations. Say we wanted to translate the Fahrenheit temperature to Celsius.

# With indecies

temp = weather['tmpf']

c = (temp - 32) * 5 / 9

c.to_frame()

# without

temp2 = weather.reset_index()[['station', 'date', 'tmpf']]

temp2['tmpf'] = (temp2['tmpf'] - 32) * 5 / 9

temp2.head()

Again, not terrible, but not as good.

And, what if you had wanted to keep Fahrenheit around as well, instead of overwriting it like we did?

Then you'd need to make a copy of everything, including the station and date columns.

We don't have that problem, since indexes are immutable and safely shared between DataFrames / Series.

temp.index is c.index

Indexes for Alignment¶

I've saved the best for last. Automatic alignment, or reindexing, is fundamental to pandas.

All binary operations (add, multiply, etc.) between Series/DataFrames first align and then proceed.

Let's suppose we have hourly observations on temperature and windspeed. And suppose some of the observations were invalid, and not reported (simulated below by sampling from the full dataset). We'll assume the missing windspeed observations were potentially different from the missing temperature observations.

dsm = weather.loc['DSM']

hourly = dsm.resample('H').mean()

temp = hourly['tmpf'].sample(frac=.5, random_state=1).sort_index()

sped = hourly['sped'].sample(frac=.5, random_state=2).sort_index()

temp.head().to_frame()

sped.head()

Notice that the two indexes aren't identical.

Suppose that the windspeed : temperature ratio is meaningful.

When we go to compute that, pandas will automatically align the two by index label.

sped / temp

This lets you focus on doing the operation, rather than manually aligning things, ensuring that the arrays are the same length and in the same order.

By deault, missing values are inserted where the two don't align.

You can use the method version of any binary operation to specify a fill_value

sped.div(temp, fill_value=1)

And since I couldn't find anywhere else to put it, you can control the axis the operation is aligned along as well.

hourly.div(sped, axis='index')

The non row-labeled version of this is messy.

temp2 = temp.reset_index()

sped2 = sped.reset_index()

# Find rows where the operation is defined

common_dates = pd.Index(temp2.date) & sped2.date

pd.concat([

# concat to not lose date information

sped2.loc[sped2['date'].isin(common_dates), 'date'],

(sped2.loc[sped2.date.isin(common_dates), 'sped'] /

temp2.loc[temp2.date.isin(common_dates), 'tmpf'])],

axis=1).dropna(how='all')

And we have a bug in there. Can you spot it?

I only grabbed the dates from sped2 in the line sped2.loc[sped2['date'].isin(common_dates), 'date'].

Really that should be sped2.loc[sped2.date.isin(common_dates)] | temp2.loc[temp2.date.isin(common_dates)].

But I think leaving the buggy version states my case even more strongly. The temp / sped version where pandas aligns everything is better.

pd.concat([temp, sped], axis=1).head()

The axis parameter controls how the data should be stacked, 0 for vertically, 1 for horizontally.

The join parameter controls the merge behavior on the shared axis, (the Index for axis=1). By default it's like a union of the two indexes, or an outer join.

pd.concat([temp, sped], axis=1, join='inner')

Merge Version¶

Since we're joining by index here the merge version is quite similar. We'll see an example later of a one-to-many join where the two differ.

pd.merge(temp.to_frame(), sped.to_frame(), left_index=True, right_index=True).head()

pd.merge(temp.to_frame(), sped.to_frame(), left_index=True, right_index=True,

how='outer').head()

Like I said, I typically prefer concat to merge.

The exception here is one-to-many type joins. Let's walk through one of those,

where we join the flight data to the weather data.

To focus just on the merge, we'll aggregate hour weather data to be daily, rather than trying to find the closest recorded weather observation to each departure (you could do that, but it's not the focus right now). We'll then join the one (airport, date) record to the many (airport, date, flight) records.

Quick tangent, to get the weather data to daily frequency, we'll need to resample (more on that in the timeseries section). The resample essentially splits the recorded values into daily buckets and computes the aggregation function on each bucket. The only wrinkle is that we have to resample by station, so we'll use the pd.TimeGrouper helper.

idx_cols = ['unique_carrier', 'origin', 'dest', 'tail_num', 'fl_num', 'fl_date']

data_cols = ['crs_dep_time', 'dep_delay', 'crs_arr_time', 'arr_delay',

'taxi_out', 'taxi_in', 'wheels_off', 'wheels_on']

df = flights.set_index(idx_cols)[data_cols].sort_index()

def mode(x):

'''

Arbitrarily break ties.

'''

return x.value_counts().index[0]

aggfuncs = {'tmpf': 'mean', 'relh': 'mean',

'sped': 'mean', 'mslp': 'mean',

'p01i': 'mean', 'vsby': 'mean',

'gust_mph': 'mean', 'skyc1': mode,

'skyc2': mode, 'skyc3': mode}

# TimeGrouper works on a DatetimeIndex, so we move `station` to the

# columns and then groupby it as well.

daily = (weather.reset_index(level="station")

.groupby([pd.TimeGrouper('1d'), "station"])

.agg(aggfuncs))

daily.head()

Now that we have daily flight and weather data, we can merge.

We'll use the on keyword to indicate the columns we'll merge on (this is like a USING (...) SQL statement), we just have to make sure the names align.

flights.fl_date.unique()

flights.origin.unique()

daily.reset_index().date.unique()

daily.reset_index().station.unique()

The merge version¶

daily_ = (

daily

.reset_index()

.rename(columns={'date': 'fl_date', 'station': 'origin'})

.assign(origin=lambda x: pd.Categorical(x.origin,

categories=flights.origin.cat.categories))

)

flights.fl_date.unique()

daily.reset_index().date.unique()

m = pd.merge(flights, daily_,

on=['fl_date', 'origin']).set_index(idx_cols).sort_index()

m.head()

Since data-wrangling on its own is never the goal, let's do some quick analysis. Seaborn makes it easy to explore bivariate relationships.

Looking at the various sky coverage states:

m.groupby('skyc1').dep_delay.agg(['mean', 'count']).sort_values(by='mean')

import statsmodels.api as sm

Statsmodels (via patsy can automatically convert dummy data to dummy variables in a formula with the C function).

mod = sm.OLS.from_formula('dep_delay ~ C(skyc1) + tmpf + relh + sped + mslp', data=m)

res = mod.fit()

res.summary()

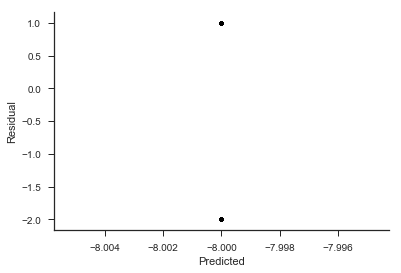

fig, ax = plt.subplots()

ax.scatter(res.fittedvalues, res.resid, color='k', marker='.', alpha=.25)

ax.set(xlabel='Predicted', ylabel='Residual')

sns.despine()

Those residuals should look like white noise. Looks like our linear model isn't flexible enough to model the delays, but I think that's enough for now.

We'll talk more about indexes in the Tidy Data and Reshaping section. Let me know if you have any feedback. Thanks for reading!