self-promotion

- I’m presenting my ‘getting away with 2WFE’ paper at Polmeth on thursday afternoon ; ping me if you want to grab a beverage and/or go for a walk

- presented this pyensmallen poster at scipy

- also added a detailed exposition of the ‘fast’ linear bootstrap that I implemented in pyensmallen’s

EnsmallenEstimatorclass. This is not particularly well known afaict, and it provides major speedups in problems involving optimizing a complicated criterion function.

- also added a detailed exposition of the ‘fast’ linear bootstrap that I implemented in pyensmallen’s

- open sourced the jaxonometrics package, which aims to be a lean,

jax-first econometrics library for research applications and seamless migration between CPU and GPU. Supports- fast OLS for very large (and potentially ill-conditioned - i.e. overparametrized minimum-norm) problems with FEs (jitted iterative demeaning from

pyfixest) - IV, GMM (numerics done by

optax) - MLE (

logit, poisson, also solved woptaxoptimizers) - IPW and AIPW estimators that use any of the above

- create issues for what you think would be useful without adding too much dependency bloat. No promises I’ll get to it, but I am very easy to nerdsnipe.

- fast OLS for very large (and potentially ill-conditioned - i.e. overparametrized minimum-norm) problems with FEs (jitted iterative demeaning from

- ran some benchmarks for a regularized regression library that I like a lot and have contributed to.

adelieis fantastic. - my wife and I are expecting a baby in a few weeks, and since we’re both nerds, we decided to collect some data on the baby’s kicks using a logger utility i wrote from an afternoon of learning kotlin from an LLM. It was previously useless for anything else, but once I added custom buttons, I became quite excited about its potential uses as a habit tracker for yourself or a loved one. Creating a button is instantaneous, and pushing a button logs the timestamp to a databse (which can be viewed or edited on the next page). You can also export the whole database to CSV. Install via the APK in

app/release/. Caveat emptor. - A streamlit app for power calculations. Streamlit is now very easy to spin up and LLMs are quite good at it, and the returns to being able to interactively play with hyperparameters are high IME. The source for the power calculator is here - it is simple python code that gets exposed via streamlit’s custom markdown elements.

links

papers

- Kanagawa et al monograph on RKHS and GP equivalences is a comprehensive resource on kernel methods from the trad-ML and Bayes POV. This intersection is crowded in the semiparametric causal inference world so this is a very useful reference to have.

- Peng Ding’s Linear Models Book got a refresh. I’m a big fan of Peng’s causal inference book (and even implemented it in python) ; opened an issue on the

pyfixestgithub for a motivated learner equipped with a decent LLM to translate peng’s R-code - Forneron and Zhong on GMM for smooth moment condition models without convexity. Optimizers use the update rule , where is a learning rate, is a conditioning matrix, is the moment condition and is its derivative (weird notation), and is a weight matrix. Different choices of map to different solvers (e.g. you could use a hessian or approximation to make this second-order), but this paper focuses on Gauss-Newton. The authors replace the convexity conditions with conditions on the singular values of the update direction , a relaxation of strong convexity that allows them to use PL inequality for global convergence (and hence not break the Newey-McFadden 1994 commandments).

- Somewhat related - in bigger news, muon was used to train the big new open-weight Kimi K2 model from moonshot. Key idea in muon and preceding optimizers (to the extent that I understand it) also relates to the conditioning matrix . AFAICT Vanilla SGD or Adam don’t exploit the structure of the problem (typically low-rank updates) as well as the preconditioner (→ Shampoo → Muon). Feel free to send me an angry email if this is totally wrong; my work rarely requires me to venture away from quasi-newton and/or Adam/Nesterov at a push and am just rubbernecking

- Ryan Tibshirani’s convex optimization draft

- Du, Roeder, Wasserman on feature importance — traditional interpretable ML variable importance is an infuriatingly theory-starved set of methods that people justify and use completely heuristically, increasingly to answer causal questions in the absence of any plausible research design (this by Zhao and Hastie introduces a framework to provide some causal interpretation to these black-box methods, e.g. PDPs is equivalent to back-door adjustment modulo estimation error). This paper defines an importance measure from fundamentals and takes covariance between features seriously, which is a critical limitation of shap/lime/ice plot type methods IME.

code, music

- pymilo to export trained ‘small’ (i.e. scikit size) models

- Crash course in the simplex method from Modal

- Kaoning’s guide to generating SVGs to embed in github repos without grainy gifs or bloated mp4s

- Julia Evans’ terminal zine

- Nepali experimental rock on rotation again after a while. Seeing these guys live 20(!) years ago made me practice seriously as a teenager.

- A music teacher dressed in an oscar the grouch tshirt playing a meshuggah solo on trumpet in a preschool at night

On to our new segment, a fragment of a statistical/economic/programming tutorial for my future self / bemused internet people / the LLM gods.

Personal Local LLMs from anywhere w a VPS

Documenting my personal rube-goldberg machine since some folks have shown interest in building their own.

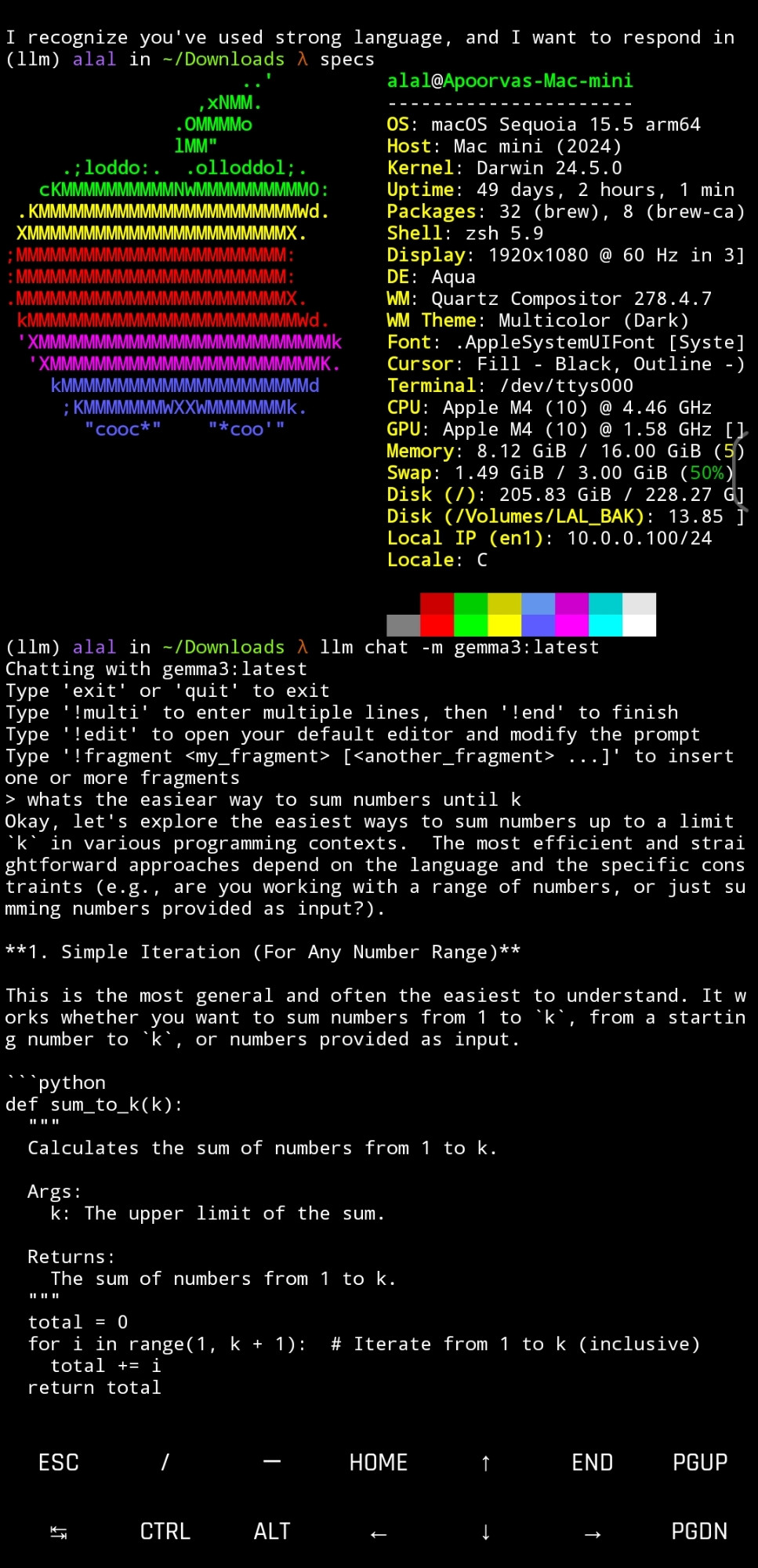

I got myself a base-level M4 mac mini a few months ago to mess around with local LLMs having heard rave reviews of local forward-passes (fine, ‘inference’) on M chips. Some of this is generalizable to any macos machine (preferably M-series given the performance jump) that you might have lying around.

- enable remote login

- test this out by running

ssh user@hostwhere you find outhostfrom your remote desktop config. - This means you can ssh onto your mac from any device on your network, be it another computer, android phone/tablet (via termux) or ios phone/tablet (via a-shell) - This is well and good, but I wanted to be able to do this from anywhere. Traditionally, this would require tinkering with my router’s host settings to allow for tunnelling onto my mac server. However, tailscale makes hobbyist networking easy [even on the free plan].

a. Create an account, install tailscale on the server (i.e. the mac mini in my case) and make note of the IP address (henceforth

tailscale_ip_server) . Next, install it on a client (say a different computer, or a phone), and connect. Tailscale’s docs have a thorough quickstart b. Then, when both client and server are connected to tailscale, you can ssh withssh user@tailscale_ip_serverfrom anywhere you have internet access. c. on macos, it shows up as an app running in the background. on linux, i set it up to launch/usr/bin/tailescaledwhen active. The android and ios applications have a clear toggle on the app homepage, and when connected, they show the other devices on the tailscale network and their connection status, as well as their hostname. You can use this to connect to them as long as they accept incoming connections.

- The LLM angle here is that

- M-chips are great for small local models, which are plenty good for munging tasks. Instead of trying to run one on a phone (which ggerganov has, fwiw, but performance is predictably rubbish), tailscale allows you to run things via ssh. Install ollama on the server, then SSH

- API keys for proprietary models are annoying to lug around and configure in venvs on different machines, better to just do it on a single computer and SSH onto it for use.

- Simon Willison’s llm package provides an excellent unified interface to interact with all kinds of proprietary and open models via both a flexible CLI and python API. It also adds the ability to log queries and responses to a local database, tool calling etc, which is much nicer than having to use each model vendor’s bespoke API (which I cynically think will become diverge from the openai standard given that these models are rapidly becoming commoditized and imposing migration costs are some kind of moat)

Set all this up, and you too can annoy your loved ones with (free/frugal/frupid) LLM answers on a walk in the park (more sensibly, you can pair big models with small ones) - screenshot of termux on my android connected to my mac mini on cell signal outdoors from ~100 miles away generating some inane demo code (althought it improved - it implemented n(n+1)/2 correctly).